Hello Guys,

For this Lab Environment we’ll need 4 Virtual Machines:

1 CentOS VM with 8GB RAM and a 15GB disk, IP 192.168.132.100, for k8s-master;

1 CentOS VM with 4GB RAM and a 15GB disk, IP 192.168.132.101, for k8s-worker1;

1 CentOS VM with 4GB RAM and a 15GB disk, IP 192.168.132.102, for k8s-worker2;

1 CentOS VM with 4GB RAM and a 15GB disk, IP 192.168.132.103, for k8s-worker3.

With the OS installation complete, let’s start installing things on it.

First of all, let’s set the IP addresses and hostnames according to the specs above. It’s also a good idea to add these lines to /etc/hosts on every node:

192.168.132.100 k8s-master
192.168.132.101 k8s-worker1
192.168.132.102 k8s-worker2
192.168.132.103 k8s-worker3
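If you’d rather not edit /etc/hosts by hand on four machines, the small shell function below is one way to do it. It’s a sketch, not part of the original procedure: the name add_lab_hosts is made up for this example, and it only appends an entry when that hostname isn’t already listed, so running it twice is harmless.

```shell
# Hypothetical helper (not part of the original steps): append the lab's
# host entries to a hosts file, skipping hostnames that are already listed.
# Usage, as root on every node:  add_lab_hosts            (edits /etc/hosts)
#                                add_lab_hosts /tmp/hosts (edits another file)
add_lab_hosts() {
  hosts_file="${1:-/etc/hosts}"
  while read -r ip name; do
    # -w matches the hostname as a whole word (so k8s-worker1 does not
    # match k8s-worker10); -F treats it as a fixed string, not a regex
    if ! grep -qwF -- "$name" "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done <<'EOF'
192.168.132.100 k8s-master
192.168.132.101 k8s-worker1
192.168.132.102 k8s-worker2
192.168.132.103 k8s-worker3
EOF
}
```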

To set the hostname, run the command below on each virtual machine, using its respective hostname:

For the Master

# hostnamectl set-hostname 'k8s-master'

For the Worker1

# hostnamectl set-hostname 'k8s-worker1'

For the Worker2

# hostnamectl set-hostname 'k8s-worker2'

For the Worker3

# hostnamectl set-hostname 'k8s-worker3'

Great. Now, ON ALL NODES, run the following steps:

Update the system

# yum update -y

Install yum-config-manager (provided by yum-utils) and add the Docker repository

# yum install -y yum-utils device-mapper-persistent-data lvm2

# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

Configure iptables for Kubernetes (so that bridged traffic is passed through iptables)

# cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

# sysctl --system
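To confirm the two settings actually took effect, you can read them back. The hypothetical check_bridge_nf function below (a sketch, not from the original steps) reads the values under /proc/sys/net/bridge. Those files only exist once the br_netfilter kernel module is loaded, which the master steps further down do with modprobe br_netfilter.

```shell
# Hypothetical helper: verify that both bridge netfilter sysctls are set to 1.
# The optional argument points at a root other than /proc/sys (handy for testing).
check_bridge_nf() {
  root="${1:-/proc/sys}"
  for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
    f="$root/net/bridge/$key"
    # These files only appear after the br_netfilter module is loaded
    if [ ! -r "$f" ]; then
      echo "missing $f (is br_netfilter loaded?)" >&2
      return 1
    fi
    if [ "$(cat "$f")" != "1" ]; then
      echo "$key is not set to 1" >&2
      return 1
    fi
  done
  echo "bridge netfilter sysctls OK"
}
```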

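Add the Kubernetes repo needed to find the kubelet, kubeadm and kubectl packages. Note: this Google-hosted repository (packages.cloud.google.com) has since been deprecated and frozen in favor of the community-owned pkgs.k8s.io repositories, so on a fresh install you will likely need to point the repo at pkgs.k8s.io instead.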

# cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
exclude=kube*
EOF

Set SELinux to Permissive Mode

# setenforce 0

# sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

Turn off swap

# swapoff -a

# sed -i '/swap/d' /etc/fstab
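By default the kubelet refuses to start while swap is enabled, so it’s worth double-checking. /proc/swaps always begins with a header line and then lists one active swap area per line; the hypothetical swap_is_off helper below (a sketch, not from the original post) simply checks that nothing follows the header.

```shell
# Hypothetical helper: succeed only if no swap area is active.
# Line 1 of /proc/swaps is a header; every further line is an active swap area.
# Usage: swap_is_off && echo "swap is off"
swap_is_off() {
  awk 'NR > 1 { active++ } END { exit (active > 0) }' "${1:-/proc/swaps}"
}
```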

Install Kubernetes and Docker

# yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes docker-ce docker-ce-cli containerd.io

Start and Enable Docker

# systemctl enable --now docker

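Start and Enable the kubelet (it will restart every few seconds until kubeadm init or kubeadm join gives it a configuration; that is expected)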

# systemctl enable --now kubelet

Let’s disable the firewall so we can get things working first, then sort out security (in a future post)

# systemctl disable firewalld --now

———————————————————————————————————————————-

OK, with those steps done on every virtual machine, let’s jump to k8s-master and run the following:

# yum -y install wget lsof

# modprobe br_netfilter

# echo '1' > /proc/sys/net/bridge/bridge-nf-call-iptables

Restart

# shutdown -rf now

Start the Cluster

# kubeadm init

When it finishes, copy the kubeadm join command from the output to use later on the worker nodes. Pay attention: you need to copy the line from your own output, because every build generates its own token. The example below is the kubeadm join line my installation generated, and it will not work for you.

# kubeadm join 192.168.132.100:6443 --token 8u9v7h.1nfot2drqnqw8mps \
    --discovery-token-ca-cert-hash sha256:8624e49e1ce94e912ac7c081deabd50196f8526c9a597e0142414204939ff510
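If the join line scrolls away, there are two ways to get it back. On the master, kubeadm token create --print-join-command prints a fresh join command (bootstrap tokens expire after 24 hours by default). Or, if you saved the init output to a file, for example by running kubeadm init | tee kubeadm-init.log instead of plain kubeadm init (the file name is just an assumption here), the hypothetical extract_join_cmd function below pulls out the join command together with its backslash-continued line.

```shell
# Hypothetical helper: print the "kubeadm join ..." command, including any
# backslash-continued lines, from a saved copy of the kubeadm init output.
# Usage: extract_join_cmd kubeadm-init.log
extract_join_cmd() {
  awk '
    /kubeadm join/ { printing = 1 }
    printing {
      print
      # Stop after the first line that does not end in a backslash
      if ($0 !~ /\\[ \t]*$/) exit
    }
  ' "${1:-kubeadm-init.log}"
}
```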

# mkdir -p $HOME/.kube

# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# chown $(id -u):$(id -g) $HOME/.kube/config

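Let’s install the pod network using Weave Net. (Weaveworks has since shut down, so the cloud.weave.works URL below may no longer respond; in that case, use the Weave Net manifest published on the project’s GitHub releases page instead.)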

# export kubever=$(kubectl version | base64 | tr -d '\n')

# kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$kubever"

Let’s check the nodes and pods of our installation. Note that it sometimes takes a while for everything to become Ready and Running; you can re-run (or watch) these commands until everything looks good.

# kubectl get nodes

# kubectl get pods --all-namespaces
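Instead of re-running kubectl get nodes by hand, you can poll. The built-in way is kubectl wait --for=condition=Ready nodes --all --timeout=300s. The sketch below does the same with a plain loop and two made-up helpers: all_nodes_ready parses kubectl get nodes --no-headers output and succeeds only if every STATUS is exactly Ready (so a cordoned node showing Ready,SchedulingDisabled counts as not ready), and wait_for_nodes retries every 10 seconds.

```shell
# Hypothetical helper: read "kubectl get nodes --no-headers" output on stdin
# and succeed only if there is at least one node and every STATUS (2nd column)
# is exactly "Ready".
all_nodes_ready() {
  awk '
    { total++ }
    $2 != "Ready" { notready++ }
    END { exit (total == 0 || notready > 0) }
  '
}

# Hypothetical helper: poll every 10 seconds, up to N attempts (default 30).
# Usage: wait_for_nodes 60
wait_for_nodes() {
  attempts="${1:-30}"
  while [ "$attempts" -gt 0 ]; do
    if kubectl get nodes --no-headers 2>/dev/null | all_nodes_ready; then
      echo "all nodes Ready"
      return 0
    fi
    attempts=$((attempts - 1))
    sleep 10
  done
  echo "timed out waiting for nodes" >&2
  return 1
}
```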

———————————————————————————————————————————-

Let’s Install the K8s Dashboard

# vi kubernetes-dashboard-deployment.yaml

Add this content:

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kube-system

---

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kube-system
type: Opaque
data:
  csrf: ""

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kube-system
type: Opaque

---

kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kube-system

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kube-system

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.0-rc7
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kube-system
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
            # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kube-system
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.4
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

Then apply the YAML file

# kubectl apply -f kubernetes-dashboard-deployment.yaml

And check again to see whether the Dashboard is up and running (again, the pod might take a while to start; just re-run or watch the command until it shows Running)

# kubectl get pods --all-namespaces

Make sure the external port (NodePort) 30001 is exposed correctly, so the Dashboard can be reached from outside the cluster.

# kubectl -n kube-system get services
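To print just the port, kubectl -n kube-system get service kubernetes-dashboard -o jsonpath='{.spec.ports[0].nodePort}' works directly. If you’d rather parse the table you already have, the hypothetical nodeport_of helper below (a sketch, not from the original post) reads kubectl get services --no-headers output and prints the NodePort from the PORT(S) column, which looks like 443:30001/TCP. It only looks at the first port mapping of the service.

```shell
# Hypothetical helper: read "kubectl get services --no-headers" output on stdin
# and print the NodePort of the named service. Column 5 is PORT(S), e.g.
# "443:30001/TCP"; splitting on ':' and '/' leaves the NodePort in the middle.
# Usage: kubectl -n kube-system get services --no-headers | nodeport_of kubernetes-dashboard
nodeport_of() {
  awk -v svc="$1" '
    $1 == svc {
      split($5, parts, "[:/]")
      print parts[2]
      found = 1
    }
    END { exit !found }
  '
}
```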

———————————————————————————————————————————-

Adjust SSL Certificates

# kubectl delete secret kubernetes-dashboard-certs -n kube-system

# mkdir $HOME/certs

# cd $HOME/certs

# openssl genrsa -out dashboard.key 2048

# openssl rsa -in dashboard.key -out dashboard.key

# openssl req -sha256 -new -key dashboard.key -out dashboard.csr -subj '/CN=localhost'

# openssl x509 -req -sha256 -days 365 -in dashboard.csr -signkey dashboard.key -out dashboard.crt

# kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kube-system
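Before rebooting, it can be worth checking that the certificate and key you just created belong together. A common trick is to compare the RSA modulus that openssl prints for each; the hypothetical cert_matches_key function below does that and then shows the certificate’s subject and expiry date. It assumes RSA keys, as generated above with openssl genrsa, and defaults to the dashboard.crt and dashboard.key file names used in this step.

```shell
# Hypothetical helper: check that a certificate and an RSA private key match
# by comparing their moduli, then print the certificate subject and expiry.
# Usage (inside $HOME/certs): cert_matches_key dashboard.crt dashboard.key
cert_matches_key() {
  crt="${1:-dashboard.crt}"
  key="${2:-dashboard.key}"
  crt_mod=$(openssl x509 -noout -modulus -in "$crt") || return 1
  key_mod=$(openssl rsa -noout -modulus -in "$key" 2>/dev/null) || return 1
  if [ "$crt_mod" != "$key_mod" ]; then
    echo "certificate and key do not match" >&2
    return 1
  fi
  openssl x509 -noout -subject -enddate -in "$crt"
}
```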

Reboot the System

# shutdown -rf now

———————————————————————————————————————————-

Now, let’s create a file named adminuser.yaml (so we can log in to the Dashboard) with this content:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system

Then apply it

# kubectl apply -f adminuser.yaml

Next, create a file named permissions.yaml that binds the cluster-admin role to the user we’ve just created, with this content:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system

Then apply it

# kubectl apply -f permissions.yaml

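With that done, it’s time to log in to the Dashboard! But first, we need to collect the token used to access it. Run the command below and copy the value of the token field, only the token itself. (On Kubernetes 1.24 and later, a token Secret is no longer created automatically for a service account; there, kubectl -n kube-system create token admin-user prints a short-lived token instead.)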

# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

The token will look something like this (again, this is just the example my installation generated; each installation generates a unique one):

eyJhbGciOiJSUzI1NiIsImtpZCI6IlJvVEotbUdDUndjbXBxdUJvbU41ekxYOE9TWTdhM1NFR1dSc3g5Ul9Dbk0ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLTlzajl3Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI4MjgyYWI5NS03YTViLTQzOTItYWQwNy0yZTY1MTc2YTgxNjIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.NKWvADh7osIFng0xgNpf2C2FBCsijXZr4HOgHTFVZS_TWMLT3wPt6rXhHnnDTu6HXUWd0era9GgXouemliTJKA-HrE8R7ArW88m1jAckR4xVquTLf0Vr1na_nmBVuMTiW9W55b6cr-hZuWuyI4F--91N43-6DzdMXpesHaur6UFojS5dUPoHybIr7HUkLWq8InV7fON06r6zWHCLQVftTYtyaAqbvlJnqcRNlnbyobsRWryjUXQRZrC8Fu1QwCe9aLVmcBByG1Tp3Ao8sA_W-ue8ISpat6_shLJa5zO9vpJEYOzfkdDFhAy6MigXl4b1T4J2q1bhpuIX82dmLVhboA
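Copying the token out of the describe output by hand is easy to get wrong. The hypothetical extract_token filter below (a sketch, not from the original post) prints only the value of the token: field. Alternatively, kubectl -n kube-system get secret <secret-name> -o jsonpath='{.data.token}' | base64 --decode returns the same value straight from the Secret, since Secret data is stored base64-encoded.

```shell
# Hypothetical helper: read "kubectl describe secret" output on stdin and print
# only the value of the "token:" field; fails if no such field is present.
# Usage:
#   kubectl -n kube-system describe secret \
#     $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}') | extract_token
extract_token() {
  awk '$1 == "token:" { print $2; found = 1 } END { exit !found }'
}
```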

———————————————————————————————————————————-

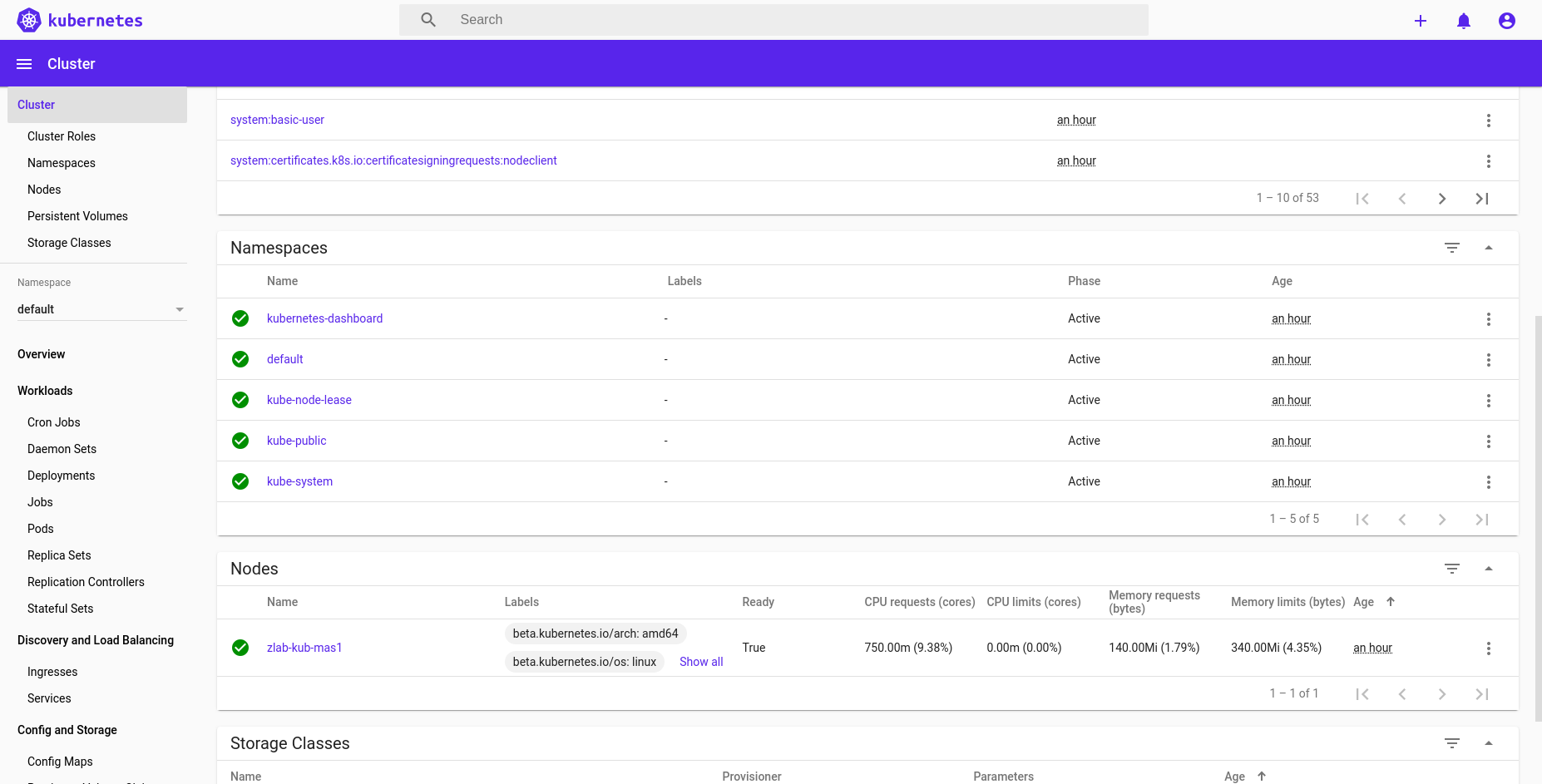

With the token copied, open your browser and go to https://192.168.132.100:30001. The browser will warn about the self-signed certificate; accept it, choose Token, paste the token you collected, and you’re inside the Dashboard.

And for the last part, with the master ready to receive the nodes, let’s join them!

Log in to every worker node and run the kubeadm join command you copied from the kubeadm init output during the master setup (the one below is mine and will not work for you):

# kubeadm join 192.168.132.100:6443 --token 8u9v7h.1nfot2drqnqw8mps \
    --discovery-token-ca-cert-hash sha256:8624e49e1ce94e912ac7c081deabd50196f8526c9a597e0142414204939ff510

While you run the command on the nodes, open the “Nodes” section of the Dashboard to watch the workers join the cluster.

That’s it! The next steps will be deploying some applications on this infrastructure and making them reliable, but that is the subject of a future post. Hope you all enjoy it; feel free to comment and share.

Any comments or improvements, just let me know.